Monash-Coles Grasping Dataset

Welcome to the Monash-Coles Grasping Dataset

A 6-DoF robotic grasping dataset built using objects from Coles supermarkets. The dataset consists of 1500 grasps of 20 unique objects (75 grasps each). For each object, 25 grasps were attempted with the object in an upright position and the remaining 50 were attempted from random positions.

The dataset was created to assist in the development of data-driven robotic grasping approaches.

You can download the dataset HERE.

Download Instructions

- Download all data zip files

- Unzip all folders to the same location as unpack_data.py

- To combine point cloud data into one folder and visualize the data, run

python unpack_data.py

You can download unpack_data.py HERE.

NOTE: unpack_data.py requires cv2, open3d and rospy_message_converter to be installed.
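Before running the script, it can help to confirm that the three modules are importable. The snippet below is a minimal sketch that is not part of the dataset tooling; the helper name `missing_modules` is hypothetical, and the module names are taken from the note above (the package names used to install them may differ, for example cv2 is commonly provided by opencv-python).

```python
import importlib.util

# Import names taken from the note above. The package names used to install
# them may differ from these import names.
REQUIRED_MODULES = ("cv2", "open3d", "rospy_message_converter")


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names in `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

If the list is empty, unpack_data.py should at least be able to import its dependencies.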

The 3D object models used in this grasping dataset can be found HERE.

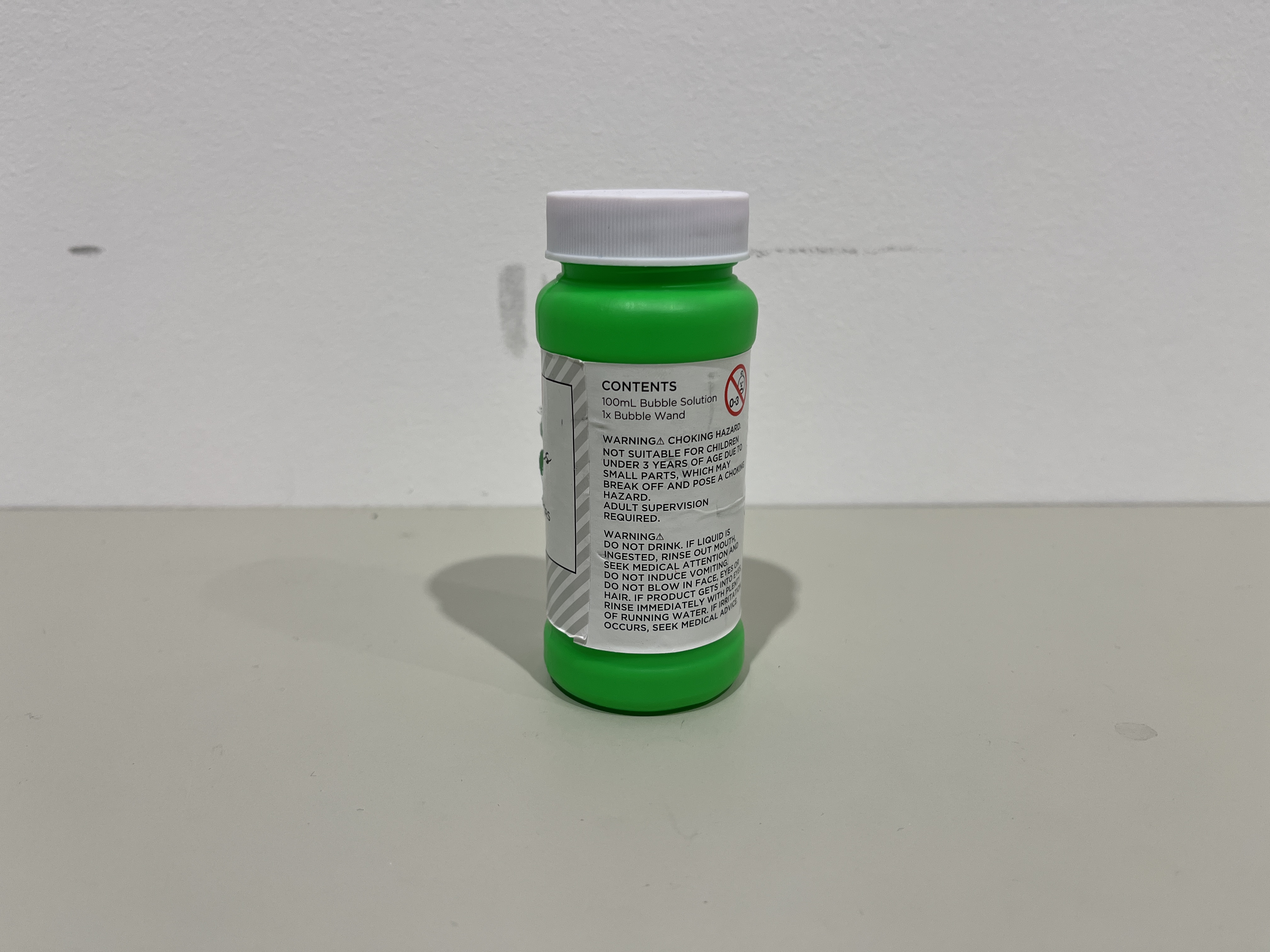

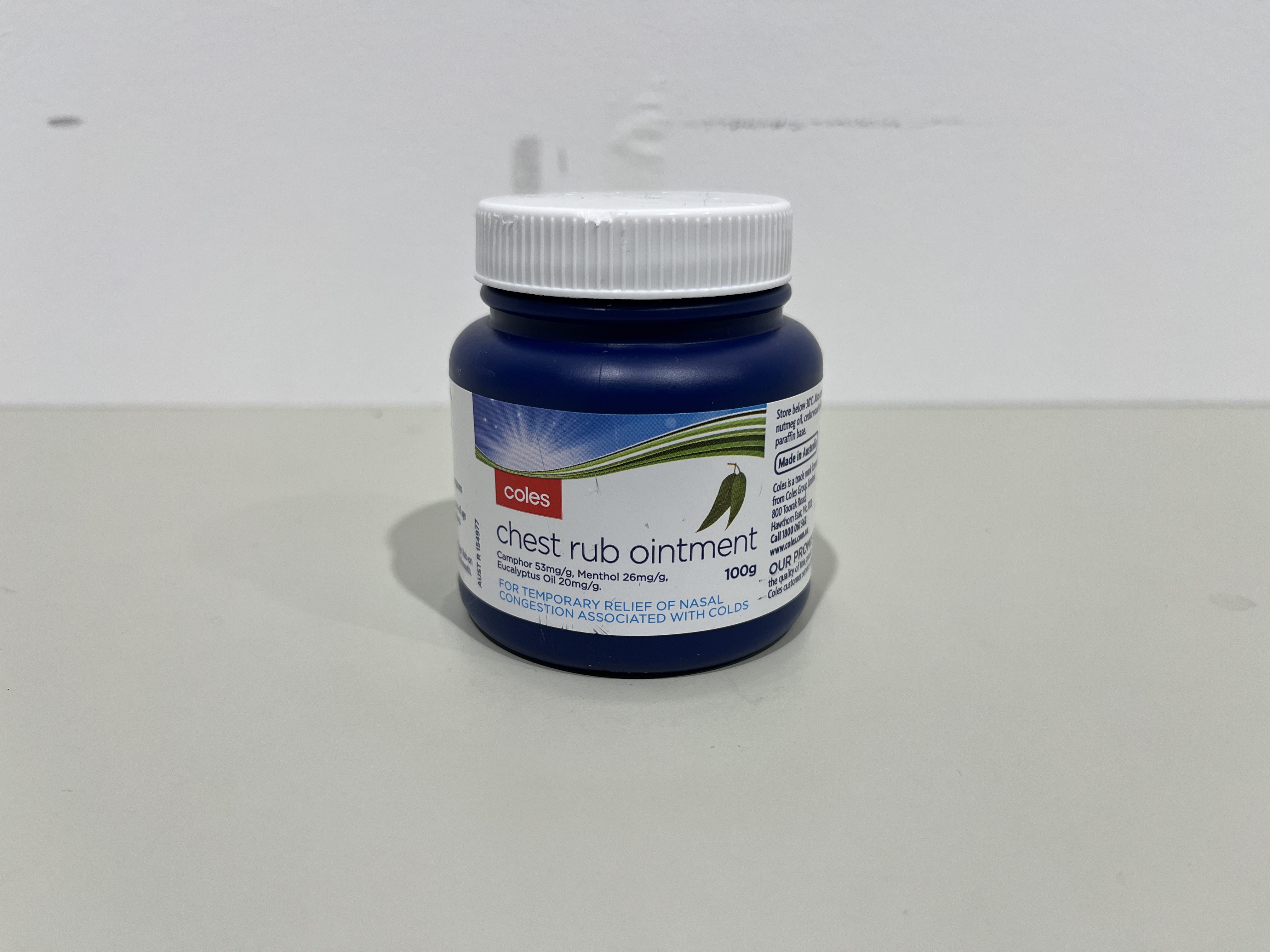

The Objects

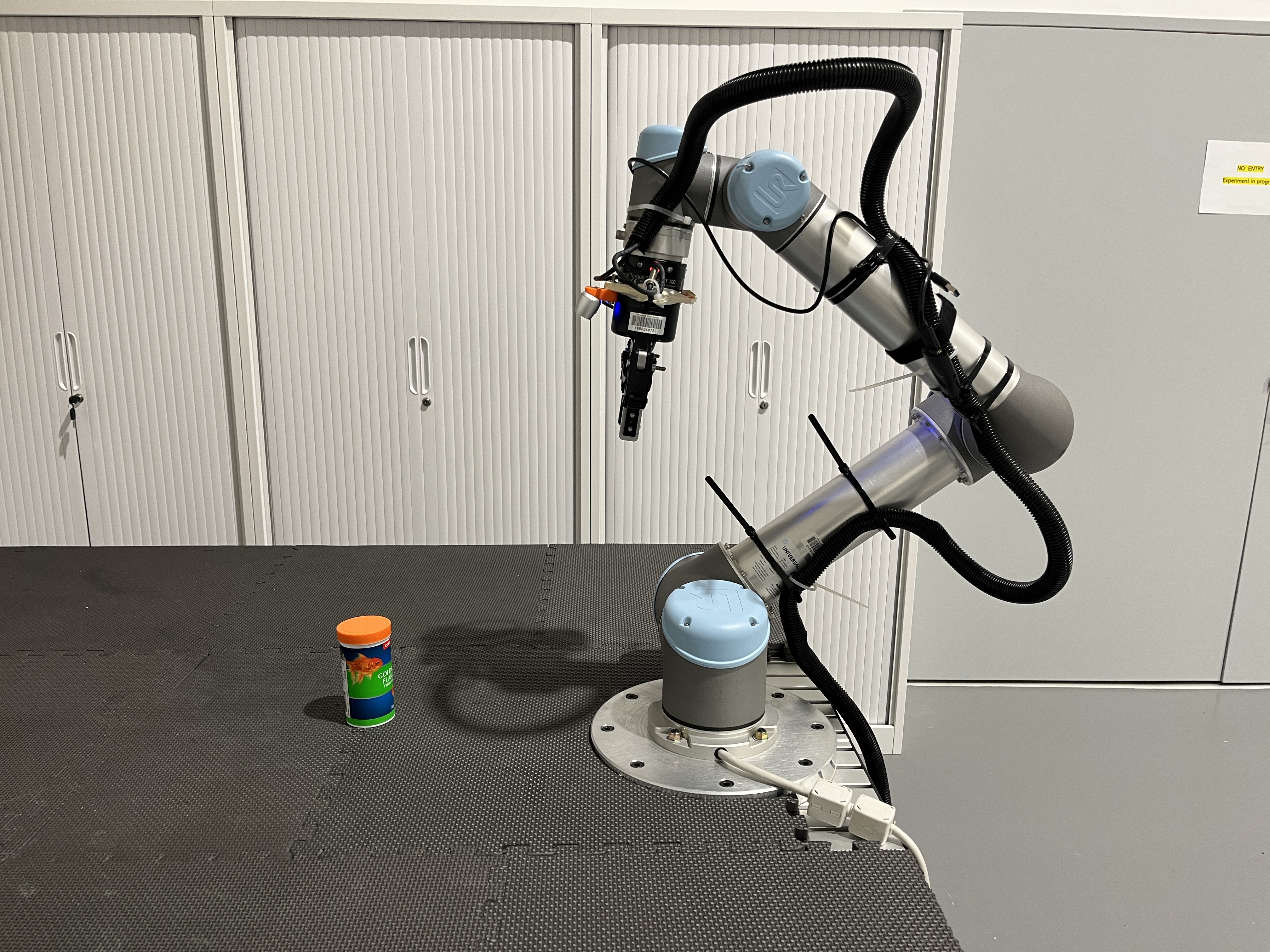

The Method

A RealSense D435i was mounted on a UR5 robotic arm and a single object was placed in front of it. We then utilised the agilegrasp [1] algorithm to find a grasp pose. The grasp pose was chosen based on its quality, as given by the agilegrasp algorithm, to encourage a success rate of about 50%. Once the grasp pose was selected, the robot would first move to an offset position 10cm from the grasp pose, in the same orientation. It would then move to the grasp pose along the given orientation and stop before closing the gripper. Next, it would lift the object vertically upwards by 15cm and rotate the elbow such that the gripper was pointing downwards and perpendicular to the table. If the object was still in the gripper after reaching this position, the grasp would be deemed a “success”. If the grasp was deemed successful, the robot would perform a stability check by rotating its elbow joint by 90 degrees such that the gripper was parallel to the table. It would then rotate back to its original position and check whether the object was still in the gripper. If so, the grasp would be deemed a “stable success”.
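The offset and lift positions described above can be sketched as follows. This is an illustration only, not the code used to collect the dataset. It assumes that the approach direction is the local +x axis of the grasp orientation, that the orientation is a quaternion in (x, y, z, w) order, and that the z axis of “base_link” points up; the function names are hypothetical.

```python
import math

APPROACH_OFFSET_M = 0.10  # 10cm offset from the grasp pose, as described above
LIFT_HEIGHT_M = 0.15      # 15cm vertical lift, as described above


def rotate_vector(quat_xyzw, vec):
    """Rotate a 3D vector by a quaternion given as (x, y, z, w)."""
    x, y, z, w = quat_xyzw
    norm = math.sqrt(x * x + y * y + z * z + w * w)
    x, y, z, w = x / norm, y / norm, z / norm, w / norm
    vx, vy, vz = vec
    # t = 2 * (q_vec x v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + (q_vec x t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def pre_grasp_position(grasp_position, grasp_quat_xyzw,
                       approach_axis=(1.0, 0.0, 0.0)):
    """Back off from the grasp position along the approach direction.

    ASSUMPTION: the approach direction is the local +x axis of the grasp
    orientation. Pass a different `approach_axis` if that is not the case.
    """
    direction = rotate_vector(grasp_quat_xyzw, approach_axis)
    return tuple(p - APPROACH_OFFSET_M * d
                 for p, d in zip(grasp_position, direction))


def lift_position(grasp_position):
    """Raise the position vertically, ASSUMING base_link z points up."""
    x, y, z = grasp_position
    return (x, y, z + LIFT_HEIGHT_M)
```

For example, with an identity orientation (0, 0, 0, 1) and a grasp position of (0.5, 0.0, 0.2), the offset position lies 10cm behind the grasp along x, and the lift position lies 15cm above it.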

For each grasp attempt the following is saved:

- Point Cloud

- RGB Image

- Depth Image

- Transform from “base_link” to “camera_link”

- All static and dynamic transforms

- Grasp pose (in “base_link” frame)

- Robot pose (in “base_link” frame)

- Success (Binary)

- Stable Success (Binary)
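The two binary labels are related: the stability check is only performed after a successful grasp, so a stable success implies a success. The sketch below shows one way to hold the labels and compute success rates over a set of attempts. The class and function names are hypothetical and do not reflect the on-disk format of the dataset.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class GraspOutcome:
    """Binary labels for one grasp attempt (hypothetical container)."""
    success: bool
    stable_success: bool

    def __post_init__(self):
        # The stability check only runs after a successful grasp, so a stable
        # success without a success would be inconsistent.
        if self.stable_success and not self.success:
            raise ValueError("stable_success requires success")


def success_rates(outcomes: Iterable[GraspOutcome]) -> Tuple[float, float]:
    """Return (success rate, stable success rate) over all attempts."""
    outcomes = list(outcomes)
    if not outcomes:
        raise ValueError("no outcomes given")
    total = len(outcomes)
    successes = sum(o.success for o in outcomes)
    stable = sum(o.stable_success for o in outcomes)
    return successes / total, stable / total
```

Both rates are computed over all attempts, so the stable success rate can never exceed the success rate.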

Robot Setup

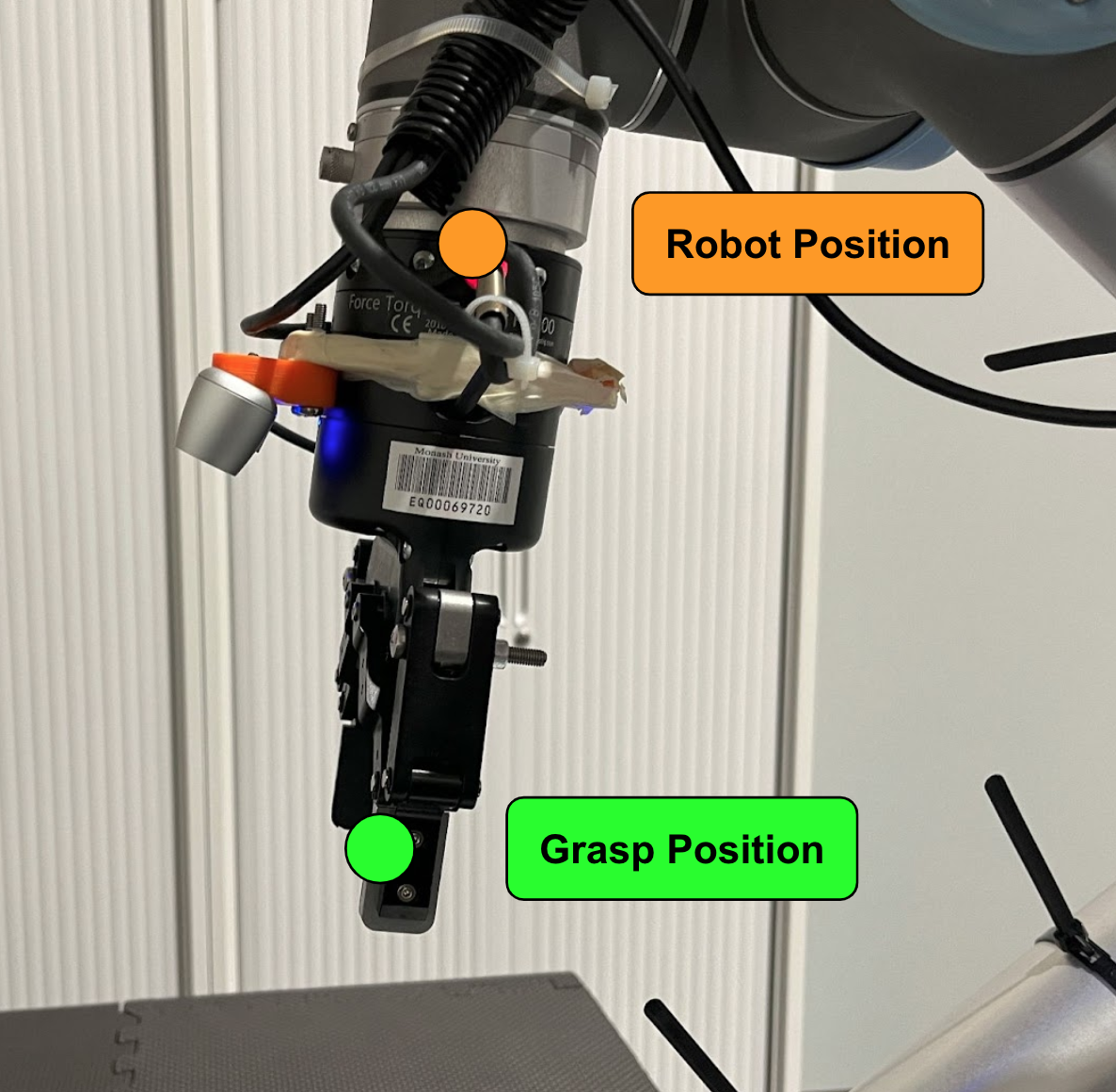

Here is our real robot setup.

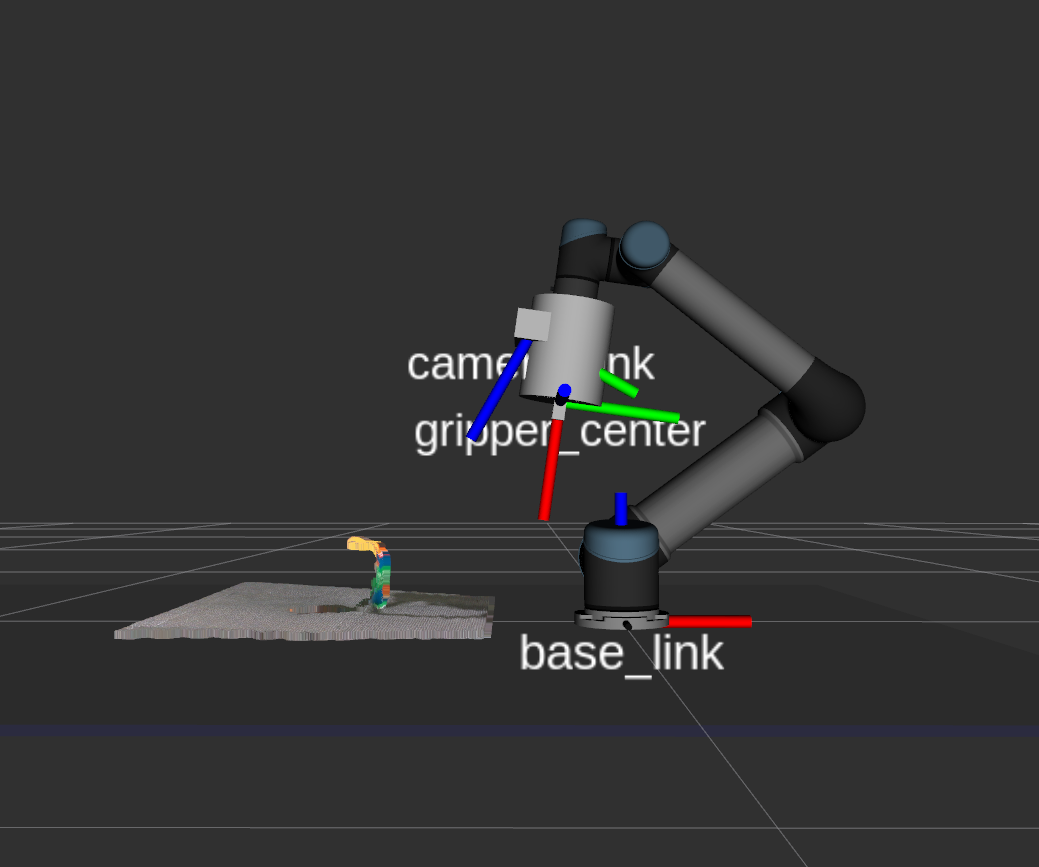

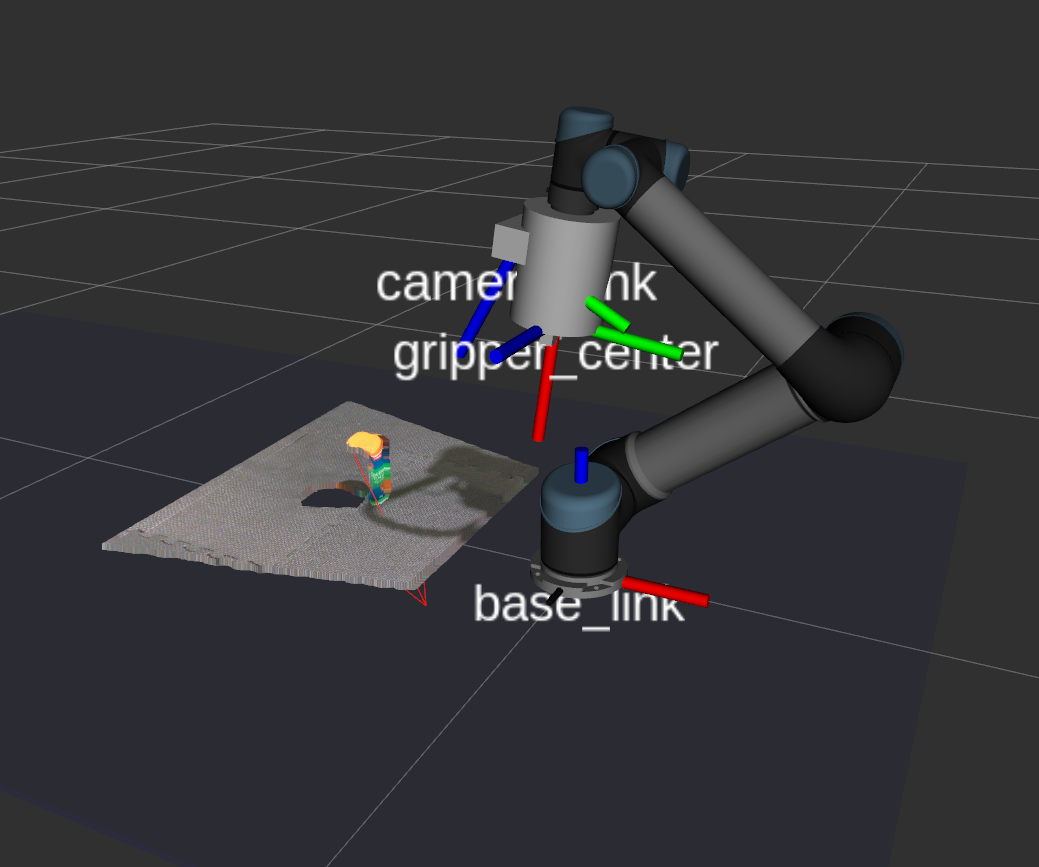

The frames utilised in our dataset are illustrated here in RViz.

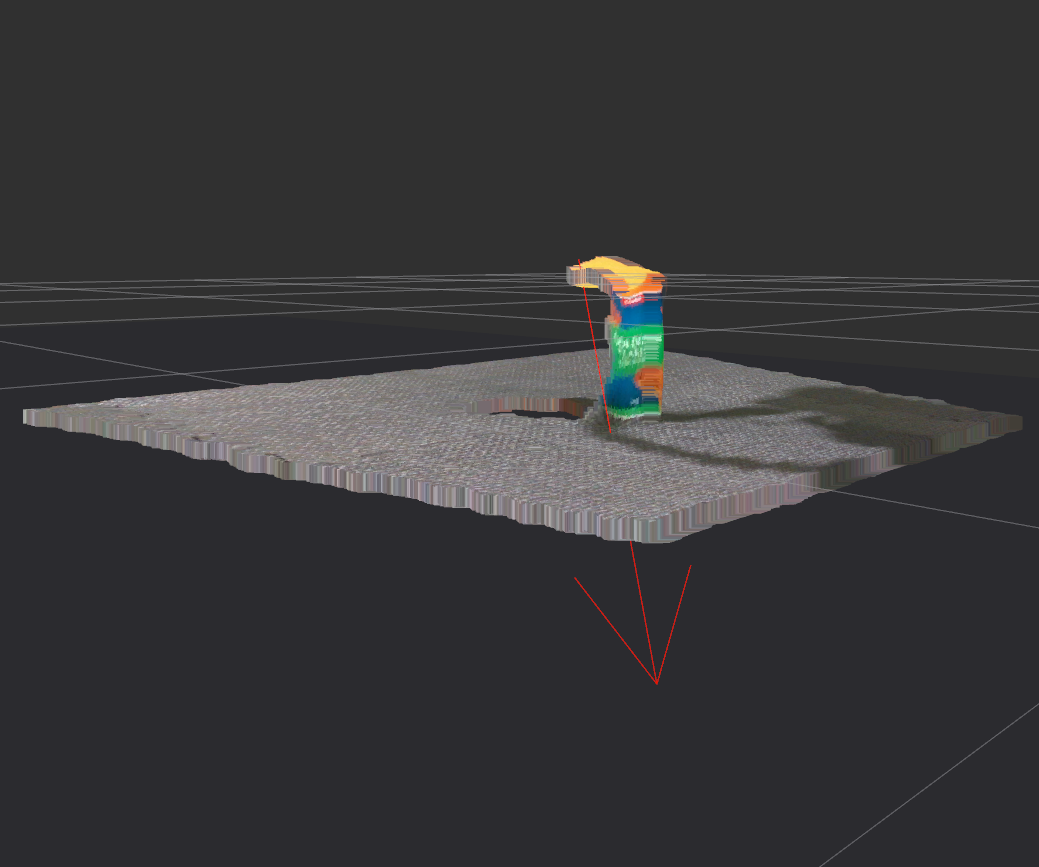

Here is an example of a grasp generated by agilegrasp. The red arrow represents the grasp pose, which consists of a position (x, y, z) and an orientation quaternion (x, y, z, w). The base of the arrow marks the position and the direction of the arrow illustrates the orientation.
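Since the grasp pose is stored in the “base_link” frame while the point cloud comes from the camera, it is often useful to express a grasp position in the camera frame. The following is a minimal sketch, assuming that the saved transform gives the pose of “camera_link” expressed in “base_link” as a translation plus an (x, y, z, w) quaternion. That interpretation is an assumption and should be checked against the data; the function names are hypothetical.

```python
import math


def quat_to_matrix(quat_xyzw):
    """Convert a quaternion (x, y, z, w) to a 3x3 rotation matrix."""
    x, y, z, w = quat_xyzw
    norm = math.sqrt(x * x + y * y + z * z + w * w)
    x, y, z, w = x / norm, y / norm, z / norm, w / norm
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def base_to_camera(point_base, cam_translation, cam_quat_xyzw):
    """Express a base_link point in the camera_link frame.

    ASSUMPTION: (cam_translation, cam_quat_xyzw) is the pose of camera_link
    expressed in base_link, so the point in the camera frame is
    R^T * (p - t).
    """
    rot = quat_to_matrix(cam_quat_xyzw)
    diff = [p - t for p, t in zip(point_base, cam_translation)]
    # Multiply by the transpose of the rotation matrix (its inverse).
    return tuple(sum(rot[j][i] * diff[j] for j in range(3)) for i in range(3))
```

If the saved transform turns out to be defined in the opposite direction, the forward form R * p + t applies instead.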

The grasp pose refers to the pose at the center of the gripper, whilst the robot pose refers to the pose of the UR5 end effector, as illustrated in the image below.

[1] Andreas ten Pas and Robert Platt. Using Geometry to Detect Grasp Poses in 3D Point Clouds. International Symposium on Robotics Research (ISRR), Italy, September 2015.

This dataset was developed by Monash University in conjunction with Coles Supermarkets.